S.T.R.E.A.M.

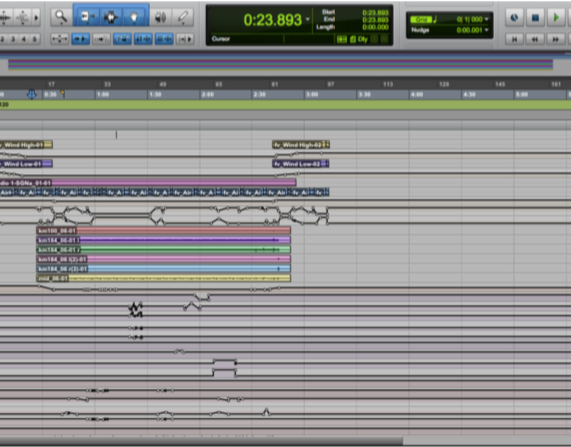

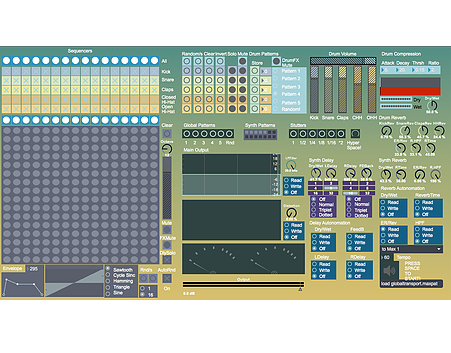

An ever-growing schism seems to pervade the audio-visual post-production environment. This project addresses some of the needs of the audio professionals in this field by giving them a tool that connects their world to that of the image. To investigate the implications of such a connection, I have created a program using Max/MSP.

Starting with the audio of a soundtrack, the system creates musical ambiences and soundscapes, adding a new layer of sound that resides in its own dimension. The very nature of this layer is uncertain and deeply related to the video: S.T.R.E.A.M.'s output can be sonically altered by the properties of the video, because some of its settings are based on the video's RGB channels and Luminosity. In this way, the system creates a musical ambience that can be understood as a bridge, not only between the different families of sounds present in a movie, but also between the audio and the video components. Several examples generated by the program are included in the present research to illustrate some of its possible uses.

Previous works, such as early studies from the 1930s, film soundtracks and digital projects, have inspired this project, which furthers concepts introduced by Benjamin Fondane, Brian Eno and Larry Sider. S.T.R.E.A.M. responds to the growing nexus of Sound Design, Composition and Audio Engineering, offering practitioners in these fields a tool to help them create and process audio content, or simply to inspire them. The birth and evolution of the project are documented at https://branded.me/ferdinandovalsecchi/posts.

The system is made of four feedback engines, each composed of feedback loops and effects. The engines' parameters react in real time to changes in the video (the RGB channels and Luminosity of the image). The audio produced by the engines is directly related to the pre-existing audio of the video, since the feedback loops create it from the input they are fed.
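To make the idea concrete, here is a minimal Python sketch of how video properties could drive a feedback loop. It is not the Max/MSP patch itself. Everything in it is an assumption made for illustration: the function names, the Rec. 601 luma weights used for "Luminosity", the one-feature-per-engine mapping, and the `max_feedback` ceiling.

```python
from typing import Dict, List, Sequence, Tuple

Pixel = Tuple[int, int, int]  # 8-bit (R, G, B)


def frame_features(pixels: Sequence[Pixel]) -> Dict[str, float]:
    """Return the mean R, G, B and luminosity of one frame, scaled to 0..1."""
    n = len(pixels)
    r = sum(p[0] for p in pixels) / (255 * n)
    g = sum(p[1] for p in pixels) / (255 * n)
    b = sum(p[2] for p in pixels) / (255 * n)
    # Assumption: luminosity approximated with Rec. 601 luma weights.
    lum = 0.299 * r + 0.587 * g + 0.114 * b
    return {"r": r, "g": g, "b": b, "lum": lum}


def engine_settings(features: Dict[str, float],
                    max_feedback: float = 0.9) -> Dict[str, float]:
    """Hypothetical mapping: each feature sets the feedback gain of one engine.

    Keeping the gain below 1.0 stops the loop from growing without bound.
    """
    return {name: value * max_feedback for name, value in features.items()}


def feedback_delay(signal: Sequence[float], delay_samples: int,
                   feedback: float) -> List[float]:
    """A single feedback loop: y[n] = x[n] + feedback * y[n - delay_samples]."""
    out: List[float] = []
    for n, x in enumerate(signal):
        delayed = out[n - delay_samples] if n >= delay_samples else 0.0
        out.append(x + feedback * delayed)
    return out


# Usage: a pure red frame drives the "r" engine hardest.
frame = [(255, 0, 0), (255, 0, 0)]
gains = engine_settings(frame_features(frame))
impulse = [1.0, 0.0, 0.0, 0.0]
red_engine_output = feedback_delay(impulse, delay_samples=1, feedback=gains["r"])
```

Because the loop's output is built entirely from the signal fed into it, the generated layer stays tied to the original audio, while the frame's colour content only changes how strongly that audio recirculates.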